Device Decodes ‘Internal Speech’ in the Brain

Technology that enables researchers to interpret brain signals could one day allow people to talk using only their thoughts

Scientists have developed brain implants that can decode internal speech — identifying words that two people spoke in their minds without moving their lips or making a sound.

Although the technology is at an early stage — it was shown to work with only a handful of words, and not phrases or sentences — it could have clinical applications in future.

Similar brain–computer interface (BCI) devices, which translate signals in the brain into text, have reached speeds of 62–78 words per minute for some people. But these technologies were trained to interpret speech that is at least partly vocalized or mimed.

The latest study — published in Nature Human Behaviour on 13 May — is the first to decode words spoken entirely internally, by recording signals from individual neurons in the brain in real time.

“It’s probably the most advanced study so far on decoding imagined speech,” says Silvia Marchesotti, a neuroengineer at the University of Geneva, Switzerland.

“This technology would be particularly useful for people that have no means of movement any more,” says study co-author Sarah Wandelt, a neural engineer who was at the California Institute of Technology in Pasadena at the time the research was done. “For instance, we can think about a condition like locked-in syndrome.”

Mind-reading tech

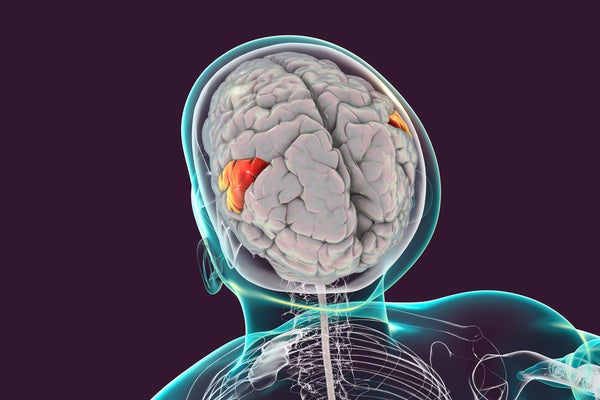

The researchers implanted arrays of tiny electrodes in the brains of two people with spinal-cord injuries. They placed the devices in the supramarginal gyrus (SMG), a region of the brain that had not been previously explored in speech-decoding BCIs.

Figuring out the best places in the brain to implant BCIs is one of the key challenges for decoding internal speech, says Marchesotti. The authors decided to measure the activity of neurons in the SMG on the basis of previous studies showing that this part of the brain is active in subvocal speech and in tasks such as deciding whether words rhyme.

Two weeks after the participants were implanted with microelectrode arrays in their left SMG, the researchers began collecting data. They trained the BCI on six words (battlefield, cowboy, python, spoon, swimming and telephone) and two meaningless pseudowords (nifzig and bindip). “The point here was to see if meaning was necessary for representation,” says Wandelt.

Over three days, the team asked each participant to imagine speaking the words shown on a screen and repeated this process several times for each word. The BCI then combined measurements of the participants’ brain activity with a computer model to predict their internal speech in real time.
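To make the idea of pairing recorded activity with a computer model concrete, here is a minimal sketch of one generic approach: a nearest-centroid classifier run on simulated firing rates. This is not the study's method, which the article does not describe. The neuron count, trial counts, noise level and every function name below are assumptions chosen purely for illustration, and the data are synthetic.

```python
import random

# The six words and two pseudowords listed in the article.
WORDS = ["battlefield", "cowboy", "python", "spoon",
         "swimming", "telephone", "nifzig", "bindip"]


def simulate_trial(template, noise_sd, rng):
    """Return one noisy firing-rate vector around a word's mean pattern (synthetic)."""
    return [rate + rng.gauss(0.0, noise_sd) for rate in template]


def centroid(trials):
    """Average a list of equal-length firing-rate vectors, neuron by neuron."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]


def squared_distance(a, b):
    """Squared Euclidean distance between two firing-rate vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def decode(trial, centroids):
    """Pick the word whose training centroid lies closest to the trial."""
    return min(centroids, key=lambda word: squared_distance(trial, centroids[word]))


def run_demo(n_neurons=20, n_train=8, n_test=8, noise_sd=3.0, seed=0):
    """Train on simulated trials, then return accuracy on fresh simulated trials."""
    rng = random.Random(seed)
    # Hypothetical mean firing rate (Hz) of each neuron for each word.
    templates = {w: [rng.uniform(0.0, 20.0) for _ in range(n_neurons)] for w in WORDS}
    # "Training": average several noisy repetitions of each word.
    centroids = {
        w: centroid([simulate_trial(t, noise_sd, rng) for _ in range(n_train)])
        for w, t in templates.items()
    }
    # "Testing": decode new noisy trials one at a time.
    correct = total = 0
    for word, template in templates.items():
        for _ in range(n_test):
            guess = decode(simulate_trial(template, noise_sd, rng), centroids)
            correct += guess == word
            total += 1
    return correct / total


if __name__ == "__main__":
    print(f"Accuracy on simulated data: {run_demo():.0%}")
```

Because the simulated words are given clearly distinct patterns, this toy decoder scores far higher than a real implant would. The sketch shows only the train-then-predict loop, not realistic performance.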

For the first participant, the BCI captured distinct neural signals for all of the words and was able to identify them with 79% accuracy. But the decoding accuracy was only 23% for the second participant, who showed preferential representation for ‘spoon’ and ‘swimming’ and had fewer neurons that were uniquely active for each word. “It’s possible that different sub-areas in the supramarginal gyrus are more, or less, involved in the process,” says Wandelt.
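For context, a decoder guessing at random among eight candidate words would be right about 12.5% of the time. The short calculation below compares the two reported accuracies with that baseline. It assumes each word was presented equally often, which the article does not state, and the function names are illustrative.

```python
# The six words and two pseudowords listed in the article.
WORDS = ["battlefield", "cowboy", "python", "spoon",
         "swimming", "telephone", "nifzig", "bindip"]


def chance_accuracy(n_classes: int) -> float:
    """Expected accuracy of uniform random guessing over equally likely classes."""
    return 1.0 / n_classes


def ratio_to_chance(accuracy: float, n_classes: int) -> float:
    """How many times better than random guessing a given accuracy is."""
    return accuracy / chance_accuracy(n_classes)


chance = chance_accuracy(len(WORDS))
print(f"Chance level for {len(WORDS)} words: {chance:.1%}")

# Accuracies reported in the article for the two participants.
for label, acc in [("Participant 1", 0.79), ("Participant 2", 0.23)]:
    print(f"{label}: {acc:.0%} is {ratio_to_chance(acc, len(WORDS)):.1f}x chance")
```

On this baseline, both reported accuracies sit above random guessing, the second by a much smaller margin. Whether that margin is statistically meaningful depends on the number of trials, which the article does not give.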

Christian Herff, a computational neuroscientist at Maastricht University in the Netherlands, thinks these results might highlight the different ways in which people process internal speech. “Previous studies showed that there are different abilities in performing the imagined task and also different BCI control abilities,” adds Marchesotti.

The authors also found that 82–85% of neurons that were active during internal speech were also active when the participants vocalized the words. But some neurons were active only during internal speech, or responded differently to specific words in the different tasks.

Next steps

Although the study represents significant progress in decoding internal speech, clinical applications are still a long way off, and many questions remain unanswered.

“The problem with internal speech is we don’t know what’s happening and how is it processed,” says Herff. For example, researchers have not been able to determine whether the brain represents internal speech phonetically (by sound) or semantically (by meaning). “What I think we need are larger vocabularies” for the experiments, says Herff.

Marchesotti also wonders whether the technology can be generalized to people who have lost the ability to speak, given that the two study participants are able to talk and have intact brain speech areas. “This is one of the things that I think in the future can be addressed,” she says.

The next step for the team will be to test whether the BCI can distinguish between the letters of the alphabet. “We could maybe have an internal speech speller, which would then really help patients to spell words,” says Wandelt.

This article is reproduced with permission and was first published on May 13, 2024.