Bilingual AI Brain Implant Lets Stroke Survivor Converse in Both Spanish and English

A first-of-its-kind AI system lets a person with paralysis communicate in two languages

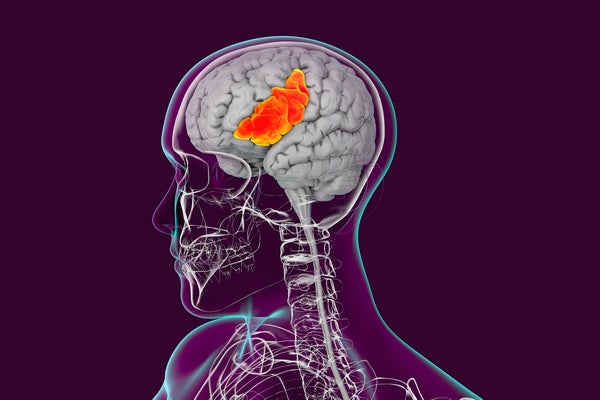

Human brain with highlighted inferior frontal gyrus, part of the prefrontal cortex and the location of Broca’s area, which is involved in language processing and speech production.

Kateryna Kon/Science Photo Library/Getty Images

For the first time, a brain implant has helped a bilingual person who is unable to articulate words to communicate in both of his languages. An artificial-intelligence (AI) system coupled to the brain implant decodes, in real time, what the individual is trying to say in either Spanish or English.

The findings, published on 20 May in Nature Biomedical Engineering, provide insights into how our brains process language, and could one day lead to long-lasting devices capable of restoring multilingual speech to people who can’t communicate verbally.

“This new study is an important contribution for the emerging field of speech-restoration neuroprostheses,” says Sergey Stavisky, a neuroscientist at the University of California, Davis, who was not involved in the study. Even though the study included only one participant and more work remains to be done, “there’s every reason to think that this approach will work with higher accuracy in the future when combined with other recent advances”, Stavisky says.

Speech-restoring implant

The person at the heart of the study, who goes by the nickname Pancho, had a stroke at age 20 that paralysed much of his body. As a result, he can moan and grunt but cannot speak clearly. In his thirties, Pancho partnered with Edward Chang, a neurosurgeon at the University of California, San Francisco, to investigate the stroke’s lasting effects on his brain. In a groundbreaking study published in 2021, Chang’s team surgically implanted electrodes on Pancho’s cortex to record neural activity, which was translated into words on a screen.

Pancho’s first sentence, ‘My family is outside’, was interpreted in English. But Pancho is a native Spanish speaker who learnt English only after his stroke. It is Spanish that still evokes in him feelings of familiarity and belonging. “What languages someone speaks are actually very linked to their identity,” Chang says. “And so our long-term goal has never been just about replacing words, but about restoring connection for people.”

To achieve this goal, the team developed an AI system to decipher Pancho’s bilingual speech. This effort, led by Chang’s PhD student Alexander Silva, involved training the system as Pancho tried to say nearly 200 words. His efforts to form each word created a distinct neural pattern that was recorded by the electrodes.

The authors then applied their AI system, which has a Spanish module and an English one, to phrases as Pancho tried to say them aloud. For the first word in a phrase, the Spanish module chooses the Spanish word that best matches the neural pattern. The English module does the same, but chooses from the English vocabulary instead. For example, the English module might pick ‘she’ as the most likely first word in a phrase and estimate its probability of being correct at 70%, whereas the Spanish one might pick ‘estar’ (to be) and estimate its probability of being correct at 40%.

Word for word

From there, both modules attempt to build a phrase. Each picks the second word based not only on the neural-pattern match but also on whether it is likely to follow the first one. So ‘I am’ would get a higher probability score than ‘I not’. The final output is two sentences, one in English and one in Spanish, but the display screen that Pancho faces shows only the version with the highest total probability score.
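The two-module decoding described above can be illustrated with a toy greedy decoder. This is a minimal sketch under stated assumptions, not the study’s actual model: the function names, the rule of multiplying the neural-match probability by a word-pair (bigram) probability, the one-word-at-a-time greedy search and every probability except the 70% (‘she’) and 40% (‘estar’) first-word figures are hypothetical.

```python
import math

# Hypothetical fallback probability for a word pair the toy language model has
# never seen (an illustrative smoothing value, not from the study).
UNSEEN_BIGRAM_P = 1e-3


def word_score(word, neural_p, prev, bigram):
    """Score a candidate word: its neural-match probability times the
    probability that it follows the previous word (assumed combination rule)."""
    lm_p = 1.0 if prev is None else bigram.get((prev, word), UNSEEN_BIGRAM_P)
    return neural_p * lm_p


def greedy_decode(steps, bigram):
    """Build a phrase one word at a time, keeping the best-scoring word at each
    step. Returns the chosen words and the phrase's total log-probability."""
    words, total_log_p = [], 0.0
    for candidates in steps:  # candidates: {word: neural-match probability}
        prev = words[-1] if words else None
        best = max(candidates, key=lambda w: word_score(w, candidates[w], prev, bigram))
        words.append(best)
        total_log_p += math.log(word_score(best, candidates[best], prev, bigram))
    return words, total_log_p


def decode_bilingual(modules):
    """Run every language module and return (language, sentence) for the output
    with the highest total probability, i.e. the one the screen would show."""
    results = {lang: greedy_decode(steps, bigram) for lang, (steps, bigram) in modules.items()}
    best_lang = max(results, key=lambda lang: results[lang][1])
    return best_lang, " ".join(results[best_lang][0])


# Hypothetical inputs. Only 0.70 ("she") and 0.40 ("estar") come from the article.
MODULES = {
    "English": (
        [{"she": 0.70, "I": 0.20}, {"is": 0.40, "am": 0.50, "not": 0.10}],
        # As in the article's example, "I am" is far more likely than "I not".
        {("she", "is"): 0.60, ("she", "am"): 0.01, ("I", "am"): 0.50, ("I", "not"): 0.01},
    ),
    "Spanish": (
        [{"estar": 0.40, "yo": 0.30}, {"bien": 0.30, "aquí": 0.20}],
        {("estar", "bien"): 0.50, ("estar", "aquí"): 0.30},
    ),
}

if __name__ == "__main__":
    print(decode_bilingual(MODULES))  # ('English', 'she is')
```

With these made-up numbers the English phrase scores 0.70 × 0.24 = 0.168 and the Spanish one 0.40 × 0.15 = 0.06, so only the English sentence would be displayed. Because each toy module commits to a single best word at every step, this is a purely greedy search; a real decoder might keep several candidate phrases alive instead, which this sketch does not model.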

The modules were able to distinguish between English and Spanish on the basis of the first word with 88% accuracy, and they decoded the correct sentence with an accuracy of 75%. Pancho could eventually have candid, unscripted conversations with the research team. “After the first time we did one of these sentences, there were a few minutes where we were just smiling,” Silva says.

Two languages, one brain region

The findings revealed unexpected aspects of language processing in the brain. Some previous experiments using non-invasive tools have suggested that different languages activate distinct parts of the brain. But the authors’ analysis of the signals recorded directly in the cortex found that “a lot of the activity for both Spanish and English was actually from the same area”, Silva says.

Furthermore, in contrast to the results of previous studies, Pancho’s neurological responses didn’t seem to differ much from those of children who grew up bilingual, even though he was in his thirties when he learnt English. Together, these findings suggest to Silva that different languages share at least some neurological features, and that these might be generalizable to other people.

Kenji Kansaku, a neurophysiologist at Dokkyo Medical University in Mibu, Japan, who was not involved in the study, says that in addition to including more participants, a next step will be to study languages “with very different articulatory properties” to English, such as Mandarin or Japanese. This, Silva says, is something he’s already looking into, along with ‘code switching’, or shifting from one language to another within a single sentence. “Ideally, we’d like to give people the ability to communicate as naturally as possible.”

This article is reproduced with permission and was first published on May 21, 2024.