Scientists are warning that facial recognition systems are “more threatening than previously thought” and pose “major challenges to privacy” after a study found that artificial intelligence can successfully predict a person’s political orientation from images of expressionless faces.

A recent study published in the journal American Psychologist suggests an algorithm’s ability to accurately guess a person’s political views is “on par with how well job interviews predict job success, or alcohol drives aggressiveness.” Lead author Michal Kosinski told Fox News Digital that 591 participants filled out a political orientation questionnaire before the AI captured what he described as a numerical “fingerprint” of their faces and compared it against a database of their responses to predict their views.

“I think that people don’t know how much they expose by simply putting a picture out there,” said Kosinski, an associate professor of organizational behavior at Stanford University’s Graduate School of Business.

“We know that people’s sexual orientation, political orientation, religious views should be protected. It used to be different. In the past, you could enter anybody’s Facebook account and see, for example, their political views, the likes, the pages they follow. But many years ago, Facebook closed this because it was clear to policymakers and Facebook and journalists that it is just not acceptable. It is too dangerous,” he continued.


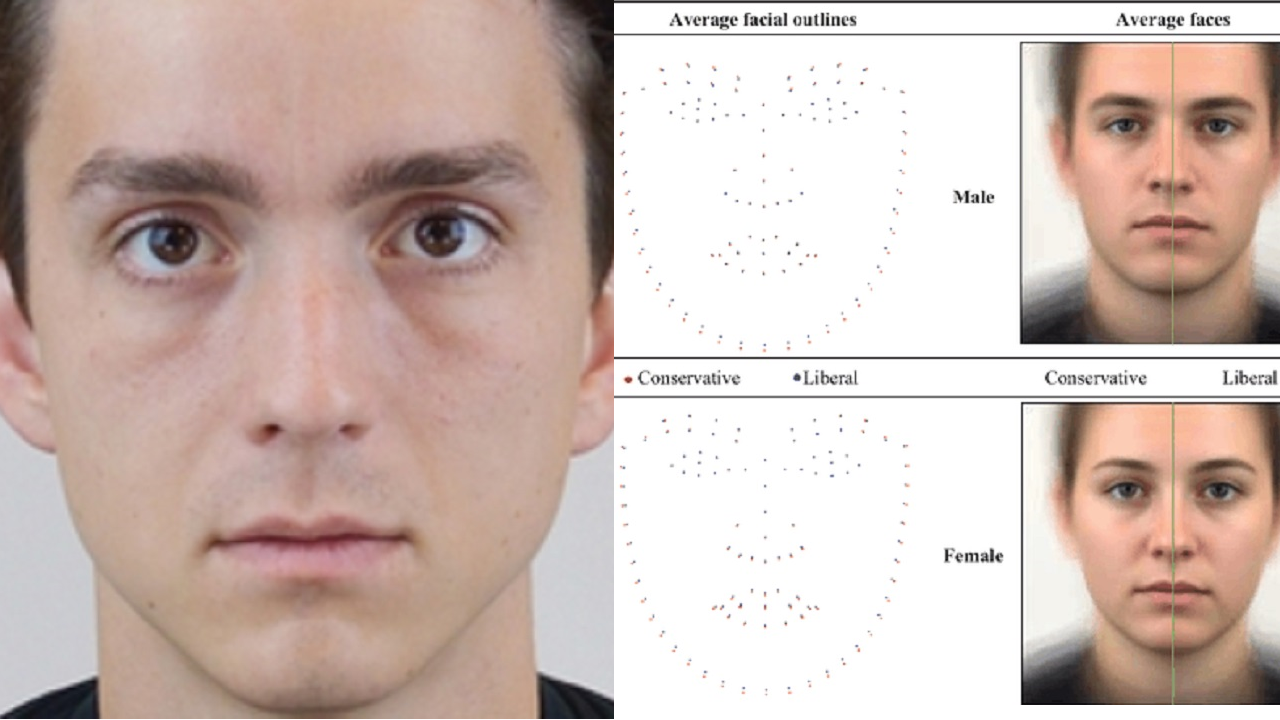

The study used AI to predict people’s political orientation based on images of expressionless faces.

“But you can still go to Facebook and see anybody’s picture. This person never met you, they never allowed you to look at a picture, they would never share their political orientation … and yet, Facebook shows you their picture, and what our study shows is that this is essentially, to some extent, the equivalent of just telling you what their political orientation is,” Kosinski added.

For the study, the authors said the images of the participants were collected in a highly controlled manner.

“Participants wore a black T-shirt adjusted using binder clips to cover their clothes. They removed all jewelry and – if necessary – shaved facial hair. Face wipes were used to remove cosmetics until no residues were detected on a fresh wipe. Their hair was pulled back using hair ties, hair pins, and a headband while taking care to avoid flyaway hairs,” they wrote.

The facial recognition algorithm VGGFace2 then analyzed the images to extract “face descriptors, or a numerical vector that is both unique to that individual and consistent across their different images,” the study said.
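To illustrate what “consistent across their different images” means in practice, here is a minimal sketch of comparing face descriptors. The article does not say how descriptors are compared, so this assumes cosine similarity, a common choice for such vectors; the names `cosine_similarity` and `find_match`, the 0.8 threshold, and the toy three-number vectors are all hypothetical (real VGGFace2 descriptors are far longer and come from a trained neural network, not written by hand).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_match(query, database, threshold=0.8):
    """Return the name whose stored descriptor is most similar to `query`,
    or None if no stored descriptor reaches the (hypothetical) threshold."""
    best_name, best_score = None, threshold
    for name, descriptor in database.items():
        score = cosine_similarity(query, descriptor)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Toy database of stored descriptors (illustrative values only).
stored = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]}
print(find_match([0.9, 0.1, 0.25], stored))  # a new photo that resembles "alice"
```

Because a descriptor is meant to stay stable across photos of the same person, two images of one face should score close to 1.0, while unrelated faces should fall below the threshold and return `None`.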


AI can reportedly predict political orientations from blank faces. (Jakub Porzycki/NurPhoto via Getty Images/File)

“Descriptors extracted from a given image are compared to those stored in a database. If they are similar enough, the faces are considered a match. Here, we use a linear regression to map face descriptors onto a political orientation scale and then use this mapping to predict political orientation for a previously unseen face,” the study also said.
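The regression step the study describes can be sketched as ordinary least squares: learn one weight per descriptor component (plus an intercept) from participants whose questionnaire scores are known, then apply those weights to a new face. This is a minimal illustration, not the authors’ code. The function names, the toy two-number descriptors and the synthetic scores are hypothetical, and the article does not describe the study’s preprocessing, regularization or validation. With long descriptors and a few hundred participants, plain least squares can overfit, so a real pipeline would likely add regularization and evaluate on held-out faces.

```python
def fit_linear_regression(X, y):
    """Ordinary least squares via the normal equations (A^T A) w = A^T y.

    X: list of descriptor vectors; y: matching questionnaire scores.
    Returns [intercept, w_1, ..., w_d].
    """
    # Prepend a constant 1.0 to each descriptor so the first weight is the intercept.
    rows = [[1.0] + [float(v) for v in x] for x in X]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return _solve(ata, aty)


def _solve(a, b):
    """Solve the square system a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest entry in this column for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: descriptors are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def predict(weights, descriptor):
    """Apply the learned linear mapping to a previously unseen descriptor."""
    return weights[0] + sum(w * v for w, v in zip(weights[1:], descriptor))


# Synthetic data generated from score = 1 + 2*d1 - d2, so OLS should recover [1, 2, -1].
train_X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
train_y = [1, 3, 0, 2, 4]
weights = fit_linear_regression(train_X, train_y)
print(predict(weights, [3, 2]))  # approximately 5.0
```

The model is deliberately simple: each descriptor component contributes linearly to the predicted score, which matches the “linear regression” the study names. The key point for privacy is that once the weights are learned, predicting a score for any new face is just a weighted sum.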

The authors wrote that their findings “underscore the urgency for scholars, the public, and policymakers to recognize and address the potential risks of facial recognition technology to personal privacy” and that an “analysis of facial features associated with political orientation revealed that conservatives tended to have larger lower faces.”

“Perhaps most crucially, our findings suggest that widespread biometric surveillance technologies are more threatening than previously thought,” the study warned. “Previous research showed that naturalistic facial images convey information about political orientation and other personal characteristics. But it was unclear whether the predictions were enabled by self-presentation, stable facial features, or both. Our results, suggesting that stable facial features convey a substantial amount of the signal, imply that individuals have significantly less control over their privacy.”

Kosinski told Fox News Digital that “algorithms can be very easily applied to millions of people very quickly and cheaply” and that the study is “more of a cautionary tale” about the technology “that is in your phone and is very widely used everywhere.”

AI can reportedly predict political orientations from blank faces. (Visions of America/Universal Images Group via Getty Images/File)


The authors concluded that “even crude estimates of people’s character traits can significantly improve the efficiency of online mass persuasion campaigns” and that “scholars, the public, and policymakers should take notice and consider tightening policies regulating the recording and processing of facial images.”