The year 2024 is one of the biggest election years in history, with billions of people going to the polls around the world. Although elections are considered protected, internal state affairs under international law, election interference between nations has nonetheless risen. In particular, cyber-enabled influence operations (CEIO) through social media, including disinformation, misinformation or “fake news,” have emerged as a singular threat that states need to counter.

Influence operations first skyrocketed into public awareness in the aftermath of Russian election interference in the 2016 U.S. presidential election. They have not waned since. Researchers, policy makers and social media companies have developed various approaches to counter these CEIOs. However, that requires an understanding, often lacking, of how these operations work in the first place.

Information, of course, has been used as a tool of statecraft throughout history. Sun Tzu proposed more than 2,000 years ago that the “supreme art of war” is to “subdue the enemy without fighting.” To that end, information can influence, distract or convince an adversary that it is not in their best interest to fight. In the 1980s, for example, the Soviet Union initiated Operation Infektion/Operation Denver, aimed at spreading the lie that AIDS was created in the U.S. With the emergence of cyberspace, such influence operations have expanded in scope, scale and speed.

Visions of cybergeddon, in which catastrophic cyber incidents wreak havoc on communications, the power grid, water supplies and other critical infrastructure, resulting in social collapse, have captured the public imagination and fueled much of the policy discourse (including references to a “cyber Pearl Harbor”).

Yet cyber operations can take another form, targeting the humans behind the keyboards rather than machines through code manipulation. Such activities conducted through cyberspace can be aimed at altering an audience’s thinking and perceptions, with the aim of ultimately changing their behavior. Organizing political rallies in an adversary state would be an example of mobilization (behavioral change). As such, modern cyber-enabled influence operations represent a continuation of international competition short of armed conflict. Unlike offensive cyber operations that might hack networked systems, shut down a pipeline or interrupt communications, they focus on “hacking” human minds. That comes in especially handy when a foreign power wants to meddle in someone else’s domestic politics.

CEIOs work by establishing self-sustaining loops of information that can manipulate public opinion and fuel polarization. They are “a new form of ‘divide and conquer’ applied to geopolitical problem of competition rather than in war,” I recently argued with a co-author in the journal Intelligence and National Security.

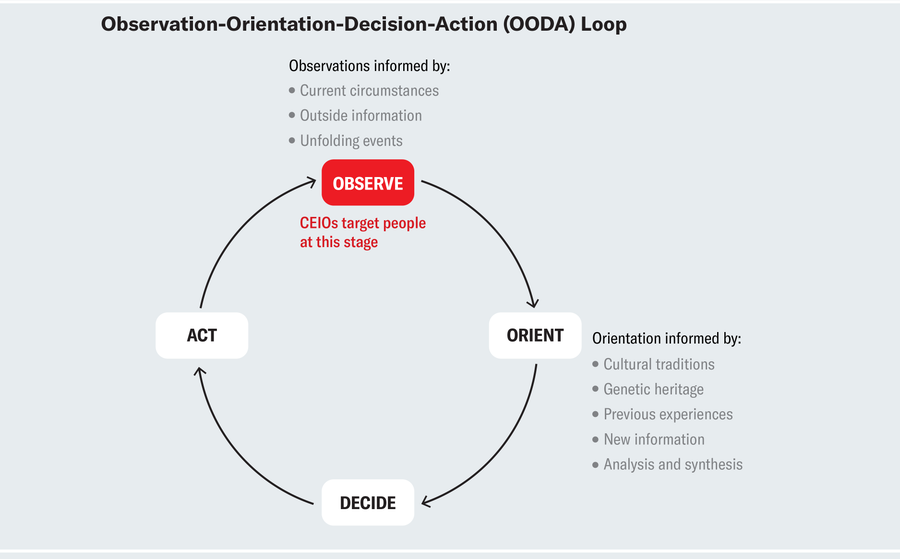

But how do these operations work? To understand that, we can use the military concept of the observation-orientation-decision-action (OODA) loop, which is widely used in planning strategy and tactics. The model helps explain how people arrive at decisions relevant to daily life: individuals in a society (us) take information from our environment (observation) and make strategic choices as a result. In the case of a dogfight (close-range aerial combat), the strategic choice would lead to the pilot’s survival (and military victory). In day-to-day life, a strategic choice might be choosing the political leader who would best represent our own interests. Here, changing what is being observed by injecting additional information into the observation part of the OODA loop can have significant consequences. CEIOs aim to do precisely that, by feeding different parts of the public with targeted messages at the observation stage, with the aim of ultimately changing their actions.

Amanda Montañez; Source: A Discourse on Winning and Losing, by John R. Boyd. Air University Press, 2018

So how do CEIOs interrupt the OODA loop using social media? Cyber-enabled influence operations work on general principles that can be understood through an identification-imitation-amplification framework. First, “outsiders” (malicious actors) identify target audiences and divisive issues through social media microtargeting.

Following this, the “outsiders” may pose as members of the target audience by assuming false identities; this imitation increases their credibility. An example is the 2016 Russian CEIO on Facebook, which bought ads to target U.S. audiences and was manned by the infamous “troll factories” in St. Petersburg. In these ads, trolls assumed false identities and used language that suggested that they belonged to the targeted society. Then they try to gain influence through messages designed to resonate with target audiences, promoting a sense of in-group belonging (at the expense of any larger imagined community, such as the nation). Such messages could take the form of traditional disinformation, but may also employ factually correct information. For this reason, using the term CEIO, instead of “disinformation,” “misinformation” or “fake news,” may offer more analytical precision.

Finally, tailored messages are amplified, both in content (increasing and diversifying the number of messages) and by increasing the number of target groups. Amplification can also occur through cross-platform posting.

Consider an example: a post by a recently enlisted soldier who recounts their lived experience of watching September 11th on TV and says that it was this event that motivated them to join the military. This is a post that was shared by the Veterans Across the Nation Facebook page. The content of this post could be entirely fabricated, and VAN might not exist “in real life.” However, such a post, although fictional, can have the emotive effect of authenticity, particularly in how it is visually presented on a digital media platform. And it can be leveraged for purposes other than promoting a shared sense of patriotism, depending on what content it becomes linked to and amplified through.

In its CEIO using Facebook, the Russian Internet Research Agency targeted different domestic audiences in the U.S. through specifically crafted messaging aimed at interfering in the 2016 election, as recounted in the Mueller Report. Reportedly, 126 million Americans were exposed to Russian efforts to influence their views, and their votes, on Facebook. Different audiences heard different messages, with groups identified using Facebook’s menu-style microtargeting features. Evidence shows that the majority of Russian-bought ads on Facebook targeted African Americans with messages that did not necessarily contain false information, but instead focused on topics like race, justice and police. Beyond the U.S., Russia reportedly targeted Germany, as well as the U.K.

To successfully make strategic choices, individuals must accurately observe their environments. If the observed reality is filtered through a manipulated lens of divisiveness, manageable points of social disagreement (expected in healthy democracies) can be turned into potentially unmanageable divisions. For example, the popular emphasis on polarization in the U.S. ignores the fact that most people are not as politically polarized as they think. Trust in institutions can be undermined, even without the help of “fake news.”

As the identification-imitation-amplification framework highlights, the question of who can legitimately participate in debates and seek to influence public opinion is of vital importance and has a bearing on what “reality” is being observed. When technology enables outsiders (foreign malicious actors) to credibly pose as legitimate members of a certain society, the potential risks of manipulation increase.

With about 49 percent of the global population participating in elections in 2024, we must counter CEIOs and election interference. Understanding imitation, identification and amplification is just the starting point. Foreign influence operations will use truthful information to sway public opinion. We must think deeply about what that means for domestic political affairs. Furthermore, if cyberspace offers access and anonymity facilitating influence operations aimed at swaying audiences and influencing elections, democracies should effectively limit that access to legitimate, genuine users while staying true to principles of free speech. This is a difficult task, one we urgently need to undertake.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.